When you customize WSO2 products and change their features and behavior, you may want to change the features provided in the Carbon Management Console (the web-based user interface). Sometimes you may also want to remove certain features that the product provides by default.

Simple Standard Solution

In such a situation what we can simply do is removing/commenting the defined features artifacts in <product-x>/modules/p2-profile-gen/pom.xml in the product source.

In this scenario you have to keep in mind that most of the features installed in wso2 products are packed as 2 separate features namely UI Feature & Server Feature. So suppose you only want to remove the UI components provided for a certain feature, but its functionalities are still used in the server side back-end. So in such a this situation you can remove the definition of that feature's UI component in the above mentioned pom.xml file.

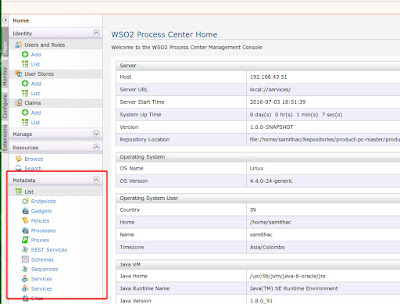

Use Case Example: You want to remove the "Meta Data" menu panel item from the management console home page.- Main section, as squared (in red) in the below figure.(Figure 1), as it is not required to your product or its usage.

You identify that it is installed by org.wso2.carbon.governance.metadata.ui.feature. This feature is packed along with org.wso2.carbon.governance.metadata.server.feature and installed as a single feature, called org.wso2.carbon.governance.metadata.feature. (not specializing 'ui' or 'server' indicates that both of them are installed as a whole) So if you want to remove the UI feature only, what you have to do is define only the Server feature.

So in the pom file,

1)replace,

<featureArtifactDef>

org.wso2.carbon.governance:org.wso2.carbon.governance.metadata.feature:${carbon.governance.version}

</featureArtifactDef>

with,

<featureArtifactDef>

org.wso2.carbon.governance:org.wso2.carbon.governance.metadata.server.feature:${carbon.governance.version}

</featureArtifactDef>

and,

2)Replace,

<feature>

<id>org.wso2.carbon.governance.metadata.feature.group</id>

<version>${carbon.governance.version}</version>

</feature>

with,

<feature>

<id>org.wso2.carbon.governance.metadata.server.feature.group</id>

<version>${carbon.governance.version}</version>

</feature>

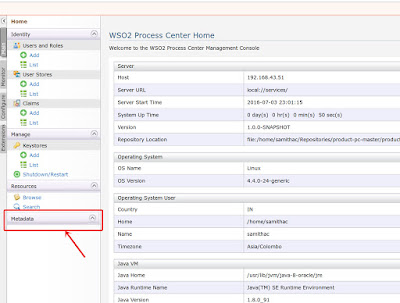

Then rebuild the product and start the server and check. That feature related ui components (icons, menus, panels) should have been removed from the management console. But this solution is not 100% successful. While trying above example too, eventhough the feature icons under the MetaData menu items are removed, still the MetaData Collapisble Menu Heading is existing, as displaed in the below figure (Figure 2). That should be because the related server feature is still existing.

And this solution is also not always applicable. Another feature installed into the product might be dependent on this feature. So we cannot remove the certain feature. But don't worry, there is an other solution left too.

Complex Solution by replacing the component.xml

The appearance of the menu panel of Carbon Management Console web interface is defined in the <product-distribution-package-home>/repository/resources/component.xml. in the built product distribution package zip. The displaying menu items are defined as <menu> elements in this xml files. So we have to remove the related element and its child elements.

In our example there were 2 sub-elements of the "metadata-menu" element.

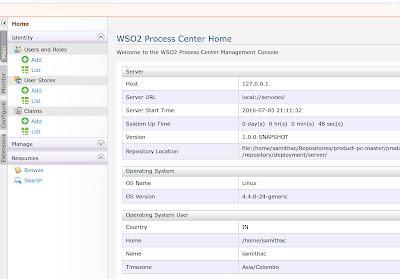

So just remove or comment out those elements and restart the server. You will see that our objective is completely successful now. Even the left over MataData menu heading is removed now, as in the below figure (Figure 3).

But this solution is not appropriate for a developer. You will have to edit that component.xml file each time you build the product. But don't worry. There is a solution. You can say your distribution pom (<product-home>/modules/distribution/pom.xml) to replace that component.xml file in the distribution product zip, with a predefined file, in which those non-required elements are removed or commented out.

This pom file does the job of packaging the final product zip. So there should be configurations on how the final product zip related directory is copied into modules/distribution/target folder. At there you can say to the maven-ant-run plugin to replace that component.xml with a predefined file which is placed somewhere in the product source by us.

Following is how this task is done in the WSO2 Process Center pre-release M2 version.

The related section in unchanged distribution pom file

The related section changed for the required objective in distribution pom file

That is all. Rebuild your product and restart the server and check how you have succeeded with your objective.

Simple Standard Solution

In such a situation, the simplest approach is to remove or comment out the corresponding feature artifact definitions in `<product-x>/modules/p2-profile-gen/pom.xml` in the product source.

Keep in mind that most features installed in WSO2 products are packaged as two separate features: a UI feature and a server feature. Suppose you want to remove only the UI components of a certain feature, while its functionality is still used by the server-side back end. In that case, you can remove the definition of that feature's UI component from the pom.xml file mentioned above.

Use Case Example: You want to remove the "Meta Data" menu panel item from the Main section of the management console home page (outlined in red in Figure 1), because your product does not need it.

![Figure 1](image-1.png)

Figure 1

Suppose you identify that this item is installed by org.wso2.carbon.governance.metadata.ui.feature. This feature is packaged together with org.wso2.carbon.governance.metadata.server.feature and installed as a single feature, org.wso2.carbon.governance.metadata.feature (the absence of a 'ui' or 'server' qualifier indicates that both parts are installed together). So, to remove only the UI feature, define only the server feature.

Make the following two changes in the pom file.

1) Replace

```xml
<featureArtifactDef>org.wso2.carbon.governance:org.wso2.carbon.governance.metadata.feature:${carbon.governance.version}</featureArtifactDef>
```

with

```xml
<featureArtifactDef>org.wso2.carbon.governance:org.wso2.carbon.governance.metadata.server.feature:${carbon.governance.version}</featureArtifactDef>
```

2) Replace

```xml
<feature>
    <id>org.wso2.carbon.governance.metadata.feature.group</id>
    <version>${carbon.governance.version}</version>
</feature>
```

with

```xml
<feature>
    <id>org.wso2.carbon.governance.metadata.server.feature.group</id>
    <version>${carbon.governance.version}</version>
</feature>
```
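If you need to apply this change to several features, the two substitutions can be scripted. The following Python sketch uses a hypothetical helper, `to_server_only`, which is not part of any WSO2 tooling. It assumes the naming convention seen in this example, where `<base>.feature` is the composite feature and `<base>.server.feature` is its server-only part. Because it performs plain text replacement, it only matches entries written without extra whitespace inside the tags.

```python
def to_server_only(pom_text: str, base: str) -> str:
    """Swap a composite (UI + server) feature for its server-only variant.

    `base` is the feature name without the '.feature' suffix, for example
    'org.wso2.carbon.governance.metadata'. Assumes the '<base>.feature' /
    '<base>.server.feature' naming convention used in this example.
    """
    composite = f"{base}.feature"
    server_only = f"{base}.server.feature"
    # Step 1: <featureArtifactDef> values have the form groupId:artifactId:version.
    pom_text = pom_text.replace(f":{composite}:", f":{server_only}:")
    # Step 2: <feature><id> values carry an extra '.group' suffix.
    pom_text = pom_text.replace(
        f"<id>{composite}.group</id>", f"<id>{server_only}.group</id>"
    )
    return pom_text
```

To use it, read `modules/p2-profile-gen/pom.xml`, pass its text together with `"org.wso2.carbon.governance.metadata"`, and write the result back before rebuilding.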

Then rebuild the product, start the server, and check the result. The UI components of that feature (icons, menus, panels) should now be removed from the management console. However, this solution is not completely successful. In the example above, even though the items under the Metadata menu are removed, the collapsible Metadata menu heading still appears, as shown in Figure 2. This is presumably because the related server feature is still installed.

![Figure 2](image-2.png)

Figure 2

This solution is also not always applicable: another feature installed in the product might depend on this feature, in which case you cannot remove it. Fortunately, there is another solution.

Complex Solution by replacing the component.xml

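The menu panel of the Carbon Management Console web interface is defined in component.xml files in the built product distribution. For the governance menus in this example, the relevant file is META-INF/component.xml inside the bundle `repository/components/plugins/org.wso2.carbon.ui.menu.governance_${carbon.kernel.version}.jar`, which is the file the distribution pom changes below overwrite. Each menu item is defined as a `<menu>` element in this file, so you have to remove the element for the menu together with the elements of its sub-menus.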

In our example, the parent "metadata_menu" element has two sub-menus, "list_sub_menu" and "add_sub_menu", which point to it through their `<parent-menu>` elements:

```xml
<menu>
    <id>metadata_menu</id>
    <i18n-key>component.metadata</i18n-key>
    <i18n-bundle>org.wso2.carbon.i18n.Resources</i18n-bundle>
    <parent-menu></parent-menu>
    <link>#</link>
    <region>region3</region>
    <order>30</order>
    <style-class>home</style-class>
</menu>

<menu>
    <id>list_sub_menu</id>
    <i18n-key>modulemgt.list</i18n-key>
    <i18n-bundle>org.wso2.carbon.i18n.Resources</i18n-bundle>
    <parent-menu>metadata_menu</parent-menu>
    <link>#</link>
    <region>region3</region>
    <order>5</order>
    <icon>../images/list.gif</icon>
    <style-class>home</style-class>
    <require-permission>/permission/admin/manage/resources/govern/metadata/list</require-permission>
</menu>

<menu>
    <id>add_sub_menu</id>
    <i18n-key>modulemgt.add</i18n-key>
    <i18n-bundle>org.wso2.carbon.i18n.Resources</i18n-bundle>
    <parent-menu>metadata_menu</parent-menu>
    <link>#</link>
    <region>region3</region>
    <order>10</order>
    <icon>../images/add.gif</icon>
    <style-class>home</style-class>
    <require-permission>/permission/admin/manage/resources/govern/metadata/add</require-permission>
</menu>
```

Remove or comment out these three elements and restart the server. This time the objective is fully achieved: even the leftover Metadata menu heading is gone, as shown in Figure 3.

![Figure 3](image-3.png)

Figure 3
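If you do edit component.xml by hand, the removal itself can be scripted. The following Python sketch uses a hypothetical function, `remove_menu_tree` (not a WSO2 API). It deletes the `<menu>` with a given `<id>` and, transitively, every `<menu>` whose `<parent-menu>` points at a removed one. Tags are matched by local name, so the sketch works whether or not the file declares a default namespace. Note that ElementTree does not keep XML comments or the original formatting by default.

```python
import xml.etree.ElementTree as ET


def local_name(tag: str) -> str:
    """Drop a '{namespace}' prefix, if any, from an ElementTree tag."""
    return tag.rsplit("}", 1)[-1]


def child_text(elem: ET.Element, name: str) -> str:
    """Stripped text of the first direct child called `name`, or ''."""
    for child in elem:
        if local_name(child.tag) == name:
            return (child.text or "").strip()
    return ""


def remove_menu_tree(xml_text: str, menu_id: str) -> str:
    """Remove the <menu> whose <id> is menu_id, plus all of its (nested) sub-menus."""
    root = ET.fromstring(xml_text)
    if root.tag.startswith("{"):
        # Re-emit the default namespace instead of 'ns0:' prefixes.
        ET.register_namespace("", root.tag[1:].split("}", 1)[0])

    menus = [e for e in root.iter() if local_name(e.tag) == "menu"]
    doomed = {menu_id}
    changed = True
    while changed:  # keep adding sub-menus of removed menus until nothing changes
        changed = False
        for menu in menus:
            this_id = child_text(menu, "id")
            if this_id and this_id not in doomed and child_text(menu, "parent-menu") in doomed:
                doomed.add(this_id)
                changed = True

    # ElementTree elements do not know their parent, so remove them via each parent.
    for parent in list(root.iter()):
        for child in list(parent):
            if local_name(child.tag) == "menu" and child_text(child, "id") in doomed:
                parent.remove(child)
    return ET.tostring(root, encoding="unicode")
```

Calling it with `"metadata_menu"` removes the three elements listed above and leaves unrelated menus untouched.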

However, this approach is not practical for a developer, because you would have to edit component.xml every time you build the product. Instead, you can configure the distribution pom (`<product-home>/modules/distribution/pom.xml`) to replace that component.xml file in the distribution zip with a predefined file in which the unneeded elements are removed or commented out.

This pom packages the final product zip, so it contains the configuration that copies the product directory into the modules/distribution/target folder. There, you can tell the maven-antrun-plugin to replace component.xml with a predefined file that you keep in the product source.

The following shows how this was done in the WSO2 Process Center pre-release M2 version.

The relevant section of the unchanged distribution pom file:

```xml
<plugin>
    <groupId>org.apache.maven.plugins</groupId>
    <artifactId>maven-antrun-plugin</artifactId>
    <executions>
        <execution>
            <id>3-extract-docs-from-components</id>
            <phase>package</phase>
            <goals>
                <goal>run</goal>
            </goals>
            <configuration>
                <tasks>
                    <property name="tempdir" value="target/docs-temp" />
                    <property name="jardir" value="target/jars" />
                    <mkdir dir="${tempdir}" />
                    <unzip dest="${tempdir}">
                        <fileset dir="target">
                            <include name="${project.artifactId}-${pc.version}.zip" />
                        </fileset>
                    </unzip>
                    <copy todir="target/wso2carbon-core-${carbon.kernel.version}/repository/components" overwrite="true">
                        <fileset dir="${tempdir}/${project.artifactId}-${pc.version}/repository/components" />
                    </copy>
                    <delete file="target/${project.artifactId}-${pc.version}.zip" />
                    <delete dir="${tempdir}" />
                </tasks>
            </configuration>
        </execution>
        <!-- any further executions omitted -->
    </executions>
</plugin>
```

The same section, changed to achieve the objective:

```xml
<plugin>
    <groupId>org.apache.maven.plugins</groupId>
    <artifactId>maven-antrun-plugin</artifactId>
    <executions>
        <execution>
            <id>3-extract-docs-from-components</id>
            <phase>package</phase>
            <goals>
                <goal>run</goal>
            </goals>
            <configuration>
                <tasks>
                    <property name="tempdir" value="target/docs-temp" />
                    <!-- tempdir2 is used below but not defined in the original excerpt; this value is an assumed choice -->
                    <property name="tempdir2" value="target/menu-temp" />
                    <property name="jardir" value="target/jars" />
                    <mkdir dir="${tempdir}" />
                    <unzip dest="${tempdir}">
                        <fileset dir="target">
                            <include name="${project.artifactId}-${pc.version}.zip" />
                        </fileset>
                    </unzip>
                    <copy todir="target/wso2carbon-core-${carbon.kernel.version}/repository/components" overwrite="true">
                        <fileset dir="${tempdir}/${project.artifactId}-${pc.version}/repository/components" />
                    </copy>

                    <!-- Extract the governance menu bundle and overwrite its META-INF/component.xml
                         with the customized copy kept in src/repository/resources -->
                    <mkdir dir="${tempdir2}" />
                    <unzip src="${tempdir}/${project.artifactId}-${pc.version}/repository/components/plugins/org.wso2.carbon.ui.menu.governance_${carbon.kernel.version}.jar"
                           dest="${tempdir2}" />
                    <copy file="src/repository/resources/component.xml" todir="${tempdir2}/META-INF/" overwrite="true" />

                    <!-- Re-zip the bundle and put it over the original one in the plugins directory -->
                    <zip destfile="org.wso2.carbon.ui.menu.governance_${carbon.kernel.version}.jar" basedir="${tempdir2}" />
                    <copy file="org.wso2.carbon.ui.menu.governance_${carbon.kernel.version}.jar"
                          todir="target/wso2carbon-core-${carbon.kernel.version}/repository/components/plugins/" overwrite="true" />

                    <!-- Clean up temporary files -->
                    <delete file="target/${project.artifactId}-${pc.version}.zip" />
                    <delete dir="${tempdir}" />
                    <delete dir="${tempdir2}" />
                    <delete file="org.wso2.carbon.ui.menu.governance_${carbon.kernel.version}.jar" />
                </tasks>
            </configuration>
        </execution>
        <!-- any further executions omitted -->
    </executions>
</plugin>
```

Note that the custom component.xml file (with the unneeded elements removed) is placed in src/repository/resources/ of the distribution module, and the build overwrites the original file in the menu bundle with it. That is all. Rebuild the product, restart the server, and verify the result.
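For reference, the core of the Ant tasks above (unzip the bundle, overwrite META-INF/component.xml, zip it again) can also be expressed in a few lines of Python, for example to patch an already built distribution. This is a sketch with a hypothetical function name, `replace_jar_entry`. It copies every other entry in its original order, so META-INF/MANIFEST.MF stays where it was, and it writes the result to a temporary file before swapping it in.

```python
import os
import tempfile
import zipfile
from pathlib import Path


def replace_jar_entry(jar_path: Path, entry: str, new_bytes: bytes) -> None:
    """Rewrite jar_path so that `entry` contains new_bytes.

    All other entries are copied unchanged and in their original order.
    """
    # Create the temporary jar next to the original so os.replace stays on one filesystem.
    fd, tmp_name = tempfile.mkstemp(suffix=".jar", dir=jar_path.parent)
    os.close(fd)
    tmp_path = Path(tmp_name)
    try:
        with zipfile.ZipFile(jar_path) as src, \
                zipfile.ZipFile(tmp_path, "w", zipfile.ZIP_DEFLATED) as dst:
            for info in src.infolist():
                if info.filename != entry:
                    dst.writestr(info, src.read(info.filename))
            dst.writestr(entry, new_bytes)
        os.replace(tmp_path, jar_path)  # swap in the rebuilt jar
    finally:
        if tmp_path.exists():  # only left behind if something failed
            tmp_path.unlink()
```

For example, call it with the governance menu bundle from repository/components/plugins, the entry name "META-INF/component.xml", and the bytes of your customized component.xml.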